#Conduktor kafka series accelsawersventurebeat free#

With many large enterprises moving toward multi-cloud environments and hybrid cloud deployments, it is crucial for companies to standardize their real-time streaming data infrastructure stack, especially since cloud providers don’t generally make their products interoperable.

Kafka stores key-value messages that come from an arbitrary number of processes called producers. The data can be divided into multiple “partitions” within different “topics.” Within a partition, messages are strictly ordered by their offsets (the position of a message within a partition) and are indexed and stored together with a timestamp. Other processes, called “consumers,” can read messages from partitions.

For stream processing, Kafka provides the Streams API, which lets developers write Java applications that read data from Kafka and write results back to Kafka. Apache Kafka can also be used with external stream processing platforms, including Apache Apex, Apache Beam, Apache Flink, Apache Spark, Apache Storm, and Apache NiFi.

Kafka runs on a cluster of one or more servers (referred to as brokers), and the partitions of all topics are distributed across the cluster nodes. Partitions are also replicated across multiple brokers. This architecture allows Kafka to deliver enormous streams of messages in a fault-tolerant manner, which has enabled it to replace some traditional messaging systems such as Java Message Service (JMS) and Advanced Message Queuing Protocol (AMQP). Starting with the 0.11.0.0 release, Kafka supports transactional writes, which provide exactly-once stream processing using the Streams API.

Kafka supports two kinds of topics: regular and compacted. Regular topics can be configured with a retention time or a space bound. If there are records older than the designated retention time, or if the space bound for a partition is exceeded, Kafka is permitted to delete old data to free up storage space.
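To make the partition, offset, and retention rules above more concrete, here is a minimal in-memory sketch in Java. It is a toy model, not Kafka’s client API: `ToyTopic`, `ToyRecord`, and their methods are hypothetical names chosen for illustration. `String.hashCode` stands in for the murmur2 hash that Kafka’s default partitioner applies to serialized keys, and real brokers apply retention to whole log segments and run compaction in a background cleaner, so this only approximates the behavior. On a real cluster, the corresponding topic settings are `retention.ms`, `retention.bytes`, and `cleanup.policy` (`delete` or `compact`).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy, in-memory model of a Kafka-style topic (hypothetical, NOT the Kafka API).
final class ToyRecord {
    final long offset;      // position of the record within its partition
    final long timestampMs; // stored together with the record
    final String key;
    final String value;

    ToyRecord(long offset, long timestampMs, String key, String value) {
        this.offset = offset;
        this.timestampMs = timestampMs;
        this.key = key;
        this.value = value;
    }
}

final class ToyTopic {
    private final List<List<ToyRecord>> partitions = new ArrayList<>();
    private final long[] nextOffset; // offsets are per partition and never reused

    ToyTopic(int numPartitions) {
        for (int i = 0; i < numPartitions; i++) partitions.add(new ArrayList<>());
        nextOffset = new long[numPartitions];
    }

    // Same key -> same partition. String.hashCode is a stand-in for
    // the murmur2 hash used by Kafka's default partitioner.
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    // Producer side: append a key-value record and return its offset.
    long append(String key, String value, long timestampMs) {
        int p = partitionFor(key);
        long offset = nextOffset[p]++;
        partitions.get(p).add(new ToyRecord(offset, timestampMs, key, value));
        return offset;
    }

    // Consumer side: read a partition from a given offset onward, in offset order.
    List<ToyRecord> readFrom(int partition, long fromOffset) {
        List<ToyRecord> out = new ArrayList<>();
        for (ToyRecord r : partitions.get(partition)) {
            if (r.offset >= fromOffset) out.add(r);
        }
        return out;
    }

    // "delete"-style retention: drop records older than retentionMs.
    // (Real Kafka deletes whole log segments, not single records.)
    void applyRetention(long nowMs, long retentionMs) {
        for (List<ToyRecord> log : partitions) {
            log.removeIf(r -> nowMs - r.timestampMs > retentionMs);
        }
    }

    // "compact"-style cleanup: keep only the latest record per key,
    // preserving original offsets (so gaps can appear).
    void compact() {
        for (int p = 0; p < partitions.size(); p++) {
            Map<String, ToyRecord> latest = new LinkedHashMap<>();
            for (ToyRecord r : partitions.get(p)) {
                latest.remove(r.key); // re-insert so iteration follows the newest offset
                latest.put(r.key, r);
            }
            partitions.set(p, new ArrayList<>(latest.values()));
        }
    }

    int size(int partition) {
        return partitions.get(partition).size();
    }
}
```

Because `compact()` keeps each surviving record’s original offset, the sketch mirrors the way a compacted Kafka topic can have gaps in its offset sequence, while a consumer reading with `readFrom` still sees records in increasing offset order.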
Today, Apache Kafka is the most popular open source streaming solution, and Kafka-based streaming data solutions are also available through cloud providers. Despite increasing competition from cloud providers and emerging open source projects, Confluent has a distinct advantage. While Kafka has grown rapidly over the last decade, the platform’s developers and community have been working to make it more user-friendly.

The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Kafka can connect to external systems (for data import/export) via Kafka Connect, and the Kafka Streams libraries are available for stream processing applications. Kafka uses a binary TCP-based protocol that is optimized for efficiency and relies on a “message set” abstraction, which naturally groups messages together to reduce the overhead of the network roundtrip. This results in larger network packets, larger sequential disk operations, and contiguous memory blocks, which allows Kafka to turn a bursty stream of random message writes into linear writes.
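The “message set” idea can be illustrated with a small batching sketch. `ToyBatcher` is a hypothetical class, not part of Kafka; the real Java producer performs this grouping internally and exposes it through settings such as `batch.size` (a byte limit per batch) and `linger.ms` (how long to wait for more records before sending). This sketch models only the byte limit, under the simplifying assumption that one flushed batch equals one network roundtrip.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical toy batcher: groups messages into batches up to a byte limit,
// so each "send" moves one larger, contiguous chunk instead of many small ones.
final class ToyBatcher {
    private final int maxBatchBytes;
    private final List<List<byte[]>> sentBatches = new ArrayList<>();
    private List<byte[]> current = new ArrayList<>();
    private int currentBytes = 0;

    ToyBatcher(int maxBatchBytes) {
        this.maxBatchBytes = maxBatchBytes;
    }

    void send(String message) {
        byte[] bytes = message.getBytes(StandardCharsets.UTF_8);
        // Close the current batch if this message would overflow it.
        // A single oversized message still gets a batch of its own.
        if (!current.isEmpty() && currentBytes + bytes.length > maxBatchBytes) {
            flush();
        }
        current.add(bytes);
        currentBytes += bytes.length;
    }

    // Emit the pending batch as one simulated network roundtrip.
    void flush() {
        if (current.isEmpty()) return;
        sentBatches.add(current);
        current = new ArrayList<>();
        currentBytes = 0;
    }

    int roundtrips() {
        return sentBatches.size();
    }
}
```

For example, with a 10-byte limit, ten 4-byte messages are grouped two per batch, so `roundtrips()` reports five sends after a final `flush()` instead of ten.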

#Conduktor kafka series accelsawersventurebeat software#

Apache Kafka is an open-source system developed by the Apache Software Foundation and written in Java and Scala.

Kafka is a distributed event store and stream processing platform. Instructor Stephane Maarek includes practical use cases and examples, such as consuming data from sources like Wikipedia and Elasticsearch, that feature real-world architecture and production deployments. Stephane finishes the course with a look at some advanced topics, such as log cleanup policies and large messages in Kafka.

#Conduktor kafka series accelsawersventurebeat how to#

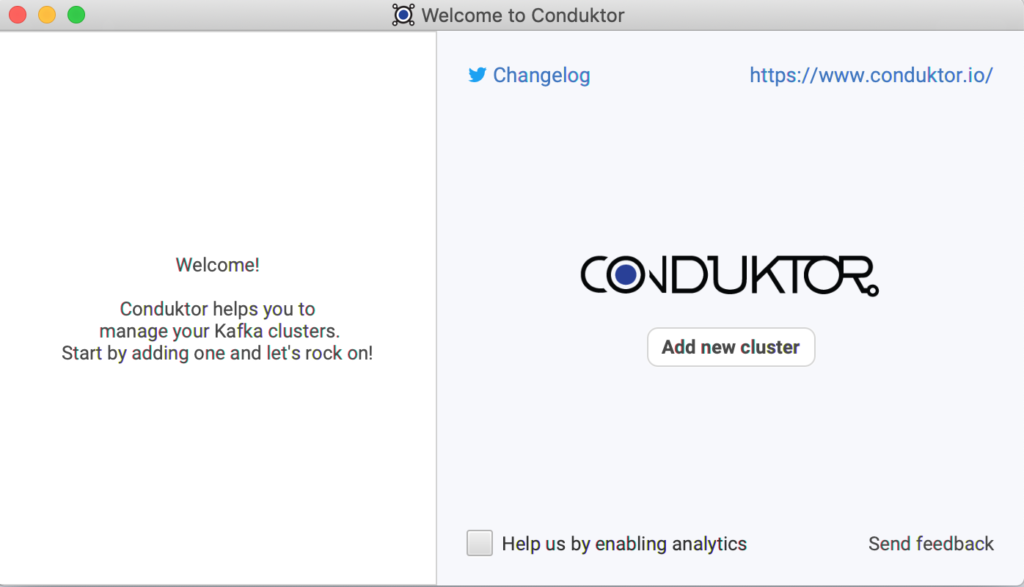

Learn how to start a personal Kafka cluster on Mac, Windows, or Linux; master fundamental concepts including topics, partitions, brokers, producers, and consumers; and start writing, storing, and reading data with producers, topics, and consumers. This training course helps you get started with all the fundamental Kafka operations, explore the Kafka CLI and APIs, and perform key tasks like building your own producers and consumers. Kafka helps you move your data where you need it, in real time, reducing the headaches that come with integrations between multiple source and target systems.

Kafka is the leading open-source, enterprise-scale data streaming technology.
